Chatbot security risks are now a major concern for cyber professionals, as the use of generative artificial intelligence (AI) and predictive AI tools continues to proliferate around the world.

While the rise of ChatGPT and other AI chatbots has been hailed as a business game-changer, it is increasingly being seen as a critical security issue. Previously, we outlined the challenges created by ChatGPT and other forms of AI.

In this blog post, we look at the growing threat from AI-associated cyber-attacks and discuss new guidance from the National Institute of Standards and Technology (NIST).

The sharp rise in chatbot security risks

From content creation to customer service, AI innovations such as ChatGPT are being hailed as hugely beneficial for businesses and consumers. A recent Kroll survey of senior leaders found that more than half the respondents have turned to AI technology to address a rise in financial crime risks.

While there is little doubt that AI is changing the way businesses operate and provide services, its potential has also been recognised by threat actors. The security risks associated with AI are growing alongside the development of the technology itself. So much so that, in mid-2023, the FBI issued a warning in which it stated that the harm caused by cybercriminals leveraging AI is now increasing at a concerning rate.

In late 2023, a survey highlighted increasing disquiet among security professionals about the use of AI by cybercriminals, with many taking the view that AI is indeed driving up the volume of attacks. A particular area of worry is around the use of deepfakes. However, despite this, many of the professionals surveyed did not seem to fully understand the full extent of AI’s potential impact on cyber security. New research from Microsoft and OpenAI has confirmed that nation states are exploring AI’s capabilities and leveraging large language models (LLMs) such as ChatGPT to support campaigns.

A rise in threats associated with AI has also been the subject of new analysis from the National Cyber Security Centre (NCSC), which warns that it will “almost certainly increase the volume and heighten the impact of cyber attacks” over the next two years, with ransomware in the spotlight as a particularly significant threat. While the use of AI is currently limited to certain types of threat actors, the NCSC assessment also states that between 2024 and 2026, there will be a rise in its use for nefarious purposes by novice cybercriminals, hackers-for-hire and others. This is anticipated to accelerate UK cyber resilience challenges in the near term for the UK government and for businesses.

Adversarial machine learning

The issue is so pressing that NIST recently released a new publication, Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations (NIST.AI.100-2). Aimed at individuals and groups responsible for designing, developing, deploying and evaluating AI systems, the report highlights the very real threat presented by AI, outlining the specific types of attacks it can give rise to and how organisations can respond. NIST comments that these defences may not be able to fully mitigate all the threats and that the security community is encouraged to help develop better approaches for addressing growing chatbot security risks. The report is intended to support progress towards developing a taxonomy and terminology of adversarial machine learning (AML) that could help to secure AI applications against manipulation.

As the report highlights, the data-driven approach of machine learning (ML) introduces additional security and privacy challenges, including:

- Adversarial manipulation of training data

- Adversarial exploitation of model vulnerabilities to adversely affect the performance of the AI system

- Malicious manipulations, modifications or interactions with models to exfiltrate sensitive information about people represented in the data, the model or proprietary enterprise data

As the report points out, AML is a key defence in the fight against chatbot security risks because it focuses on understanding the capabilities of attackers and their goals, as well as the design of attack methods that exploit ML vulnerabilities during the development, training and deployment phase of the ML lifecycle. AML also includes the design of ML algorithms that can stand up to the security and privacy threats posed by AI.

Chatbot security attack objectives

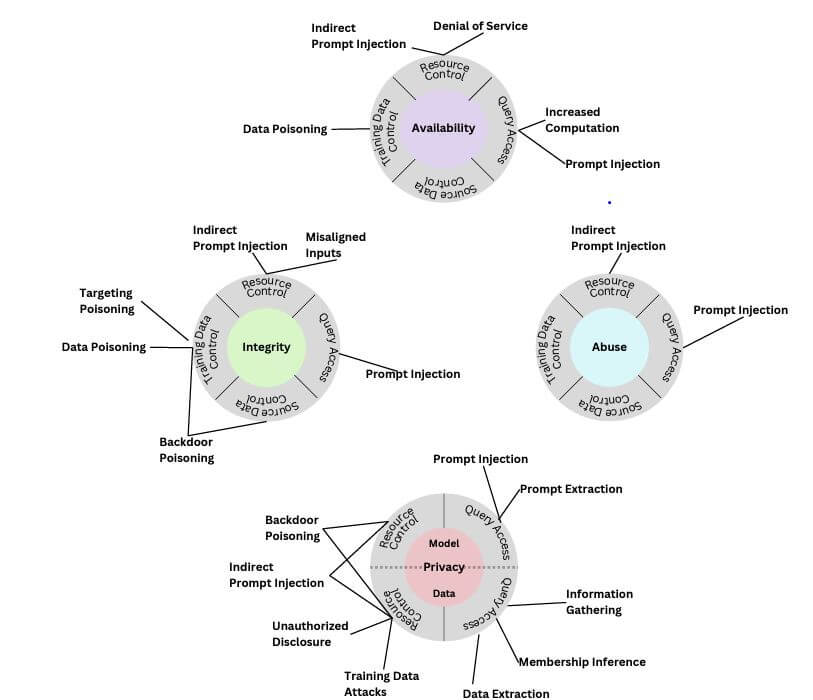

The NIST report classifies AI attackers’ objectives according to three main types of security violations, with adversarial success indicated by achieving one or more of the following goals:

- Availability Breakdown

- Integrity Violations

- Privacy Compromise

Types of chatbot security risks

Image of taxonomy of attacks on generative AI systems from the NIST report: Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations (NIST.AI.100-2)

The new NIST report outlines types of attacks in which threat actors both target AI and leverage it to execute other types of cybercrime. NIST classifies these into four specific groups, outlining mitigations for each.

Evasion attacks

Aimed at generating adversarial output after a machine learning model is deployed. The NIST guidance looks at white box and black box types of attacks.

Poisoning attacks

Aimed at targeting the training phase of the algorithm by introducing corrupted data. Types of attacks include:

- Availability poisoning: The entire ML model is corrupted in an availability attack, leading to model misclassification on the majority of testing samples.

- Targeted poisoning: Poisoning attacks against machine learning that change the prediction on a small number of targeted samples

- Backdoor poisoning attacks: Poisoning attacks against machine learning that change the prediction on samples, including a backdoor pattern

- Model poisoning: Model poisoning attacks attempt to directly modify the trained ML model to inject malicious functionality into the model.

Privacy attacks

Aimed at gaining sensitive information about the system or data it was trained on through the use of questions that work around existing guardrails. Types of attacks include:

- Data reconstruction: The ability to recover an individual’s data from released aggregate information

- Membership inference: Aimed at determining whether a particular record or data sample was part of the training dataset used for the statistical or ML algorithm

- Model extraction: Extracts information about the model architecture and parameters by submitting queries to the ML model trained by a machine learning as a service (MLaaS) provider

- Property inference attacks: The attacker attempts to learn global information about the training data distribution by interacting with an ML model.

Abuse attacks

Aimed at compromising legitimate sources of information, such as a web page with incorrect information, to repurpose the system’s intended use through indirect prompt injection in order to execute fraud, malware or manipulation. Types of attacks include phishing, masquerading, malware attacks and historical distortion, in which an attacker can prompt the model to output disinformation. NIST gives the example of researchers who demonstrated this by successfully prompting Bing Chat to deny that Albert Einstein won a Nobel Prize.

For proactive steps to defend against chatbot security risks, check out our post on ChatGPT security.

How Kroll can help

Ensuring effective cyber resilience against the increasing chatbot security risks demands a comprehensive approach to cyber defence. Kroll Responder, our Managed Detection and Response (MDR) service, supplies EDR and other detection technologies, as well as the people and intelligence required to utilise them effectively, to continuously hunt for threats across networks and endpoints and help shut them down before they cause damage and disruption. Functioning as an extension of your IT team, Kroll Responder combines world-class security expertise, leading network and endpoint detection technologies, and aggregated security intelligence to defend against current and emerging security threats, 24/7/365.